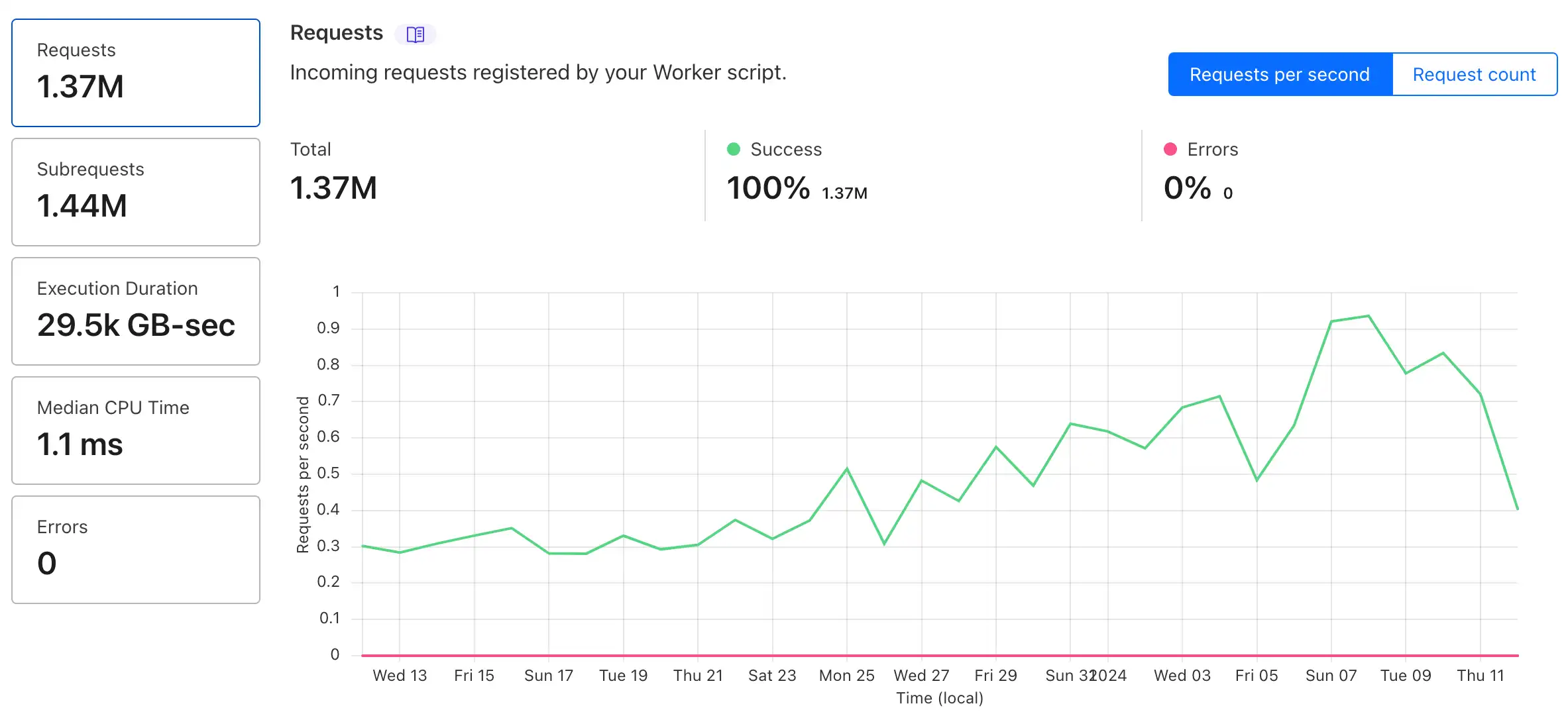

The Stickystats 'worker' script, which handles every single request, runs with a median execution time of 1.3ms (CPU time) at scale. Shweeeet! It uses around 22MB of memory per request.

I'm not normally quite so excited about how stuff turns out. What we do is engineering, and it's stuff we've done literally hundreds, if not thousands, of times before. This time, however, I'm extra pleased with how a particular solo project turned out, because I was astonished at the difference running on the edge has made. I literally cannot afford to run this project on traditional Azure or AWS cloud; it just doesn't scale enough.

The bot management solution I built is called Stickystats. It started out providing rudimentary stats, but evolved into a firewall and bot management (bolt-on) service that protects a professional domain portfolio and, most importantly, reduces my running and parking costs, which it has successfully done by more than a factor of 10. Edge is fast; I mean, really fast!


To put this in perspective, coming from a .NET C# background: to deliver similar value, I would have needed a cluster of services running HOT (warmed up), with close to no COLD start times and close to zero errors. No cheap servers that can be reclaimed by other, higher-priority jobs; just... pure, raw, cost-effective power.

This is especially good news, since the pay-as-you-go (PAYG) model I'm using is based on total CPU execution time.

Given that Azure Functions rounds memory usage up to the nearest 128MB, and (also on Azure) bills a minimum of 100ms per execution, the CPU and RAM savings alone open up massive long-term opportunities for Goblinfactory, even ignoring the fact that Cloudflare services are already a huge saving. Compare that 100ms minimum with my 1.3ms execution times on Cloudflare!
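As a rough, back-of-the-envelope sketch of why those minimums matter, the snippet below compares what a month of requests would be billed under Azure-style rules with a pure CPU-time model. The Azure rules (memory rounded up to 128MB, duration rounded up to whole milliseconds with a 100ms floor) are assumptions based on the Azure Functions Consumption-plan pricing; it also assumes every request takes exactly the 1.3ms median and 22MB, and it compares billed units, not prices.

```python
import math

# Assumed Azure Functions Consumption-plan rules (verify against current Azure pricing):
AZURE_MEMORY_STEP_MB = 128   # memory is rounded up to the nearest 128MB
AZURE_MIN_DURATION_MS = 100  # minimum billed duration per execution


def azure_billed_gb_seconds(memory_mb: float, duration_ms: float) -> float:
    """GB-seconds billed for a single execution under the assumed Azure rules."""
    steps = max(1, math.ceil(memory_mb / AZURE_MEMORY_STEP_MB))
    billed_mb = steps * AZURE_MEMORY_STEP_MB
    # Duration is rounded up to whole milliseconds, but never billed below the floor.
    billed_ms = max(AZURE_MIN_DURATION_MS, math.ceil(duration_ms))
    return (billed_mb / 1024) * (billed_ms / 1000)


def cpu_time_billed_ms(cpu_ms: float) -> float:
    """CPU milliseconds billed for a single request on a CPU-time model (no minimum assumed)."""
    return cpu_ms


# Figures from this post; the median is treated as if it applied to every request.
REQUESTS_PER_MONTH = 2_000_000
MEDIAN_CPU_MS = 1.3
MEMORY_MB = 22

azure_total_gb_s = azure_billed_gb_seconds(MEMORY_MB, MEDIAN_CPU_MS) * REQUESTS_PER_MONTH
cpu_total_ms = cpu_time_billed_ms(MEDIAN_CPU_MS) * REQUESTS_PER_MONTH

# With a 100ms floor, a 1.3ms request is billed for roughly 77x the time it actually used.
overbilling_factor = AZURE_MIN_DURATION_MS / MEDIAN_CPU_MS

print(f"Azure-style billed: {azure_total_gb_s:,.0f} GB-s per month")
print(f"CPU-time billed:    {cpu_total_ms:,.0f} CPU-ms per month")
print(f"Duration billed vs used on Azure: ~{overbilling_factor:.0f}x")
```

Under these assumptions each 22MB, 1.3ms request is billed on Azure as 128MB for 100ms (0.0125 GB-s), so the month comes to 25,000 GB-s; the floor, not the code, dominates the bill.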

The business need was to mitigate DDoS and hacking attempts at scale, and to lower costs. The project is live on 231 domains. Early volumes are around 2M requests per month, with an average P99 latency of 22.91ms (when logged) and a maximum P99 of 233ms.

The forecasted load for this project is going to grow massively, because this setup allows me to rapidly onboard more applications. By running on the edge on Cloudflare, I expect performance to stay the same even at 10 or 100 times my current load, with zero changes to my architecture, DevOps, or tests.

Update 11 Jun '24 - Errata

Some important corrections to the billing details on Cloudflare.

The report shown in this blog post contains a strange legacy Cloudflare value, Execution Duration, with an odd value of 29.5K GB-sec. This is apparently the wall-clock time for all requests (tbc), and it is not what is used to determine pricing on the new pricing model.

Cloudflare previously billed in GB-sec of memory usage per request, but that pricing model has since been deprecated (simplified). More info on the latest pricing here: https://developers.cloudflare.com/workers/platform/pricing. The new pricing effectively charges only for milliseconds.
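To make the legacy figure a little more concrete, here is a rough conversion. It assumes (tbc, not confirmed by Cloudflare here) that the legacy GB-sec value is wall-clock duration multiplied by a fixed 128MB memory allocation; the constant and function names are illustrative only.

```python
# Assumption: legacy duration metric = wall-clock seconds x a fixed 128MB allocation.
LEGACY_MEMORY_GB = 128 / 1024  # 0.125 GB


def wall_clock_seconds_from_gb_seconds(gb_seconds: float, memory_gb: float = LEGACY_MEMORY_GB) -> float:
    """Invert GB-s = seconds x GB to recover total wall-clock seconds."""
    return gb_seconds / memory_gb


reported_gb_seconds = 29_500  # the (rounded) "29.5K GB-sec" from the report
total_seconds = wall_clock_seconds_from_gb_seconds(reported_gb_seconds)
print(f"{total_seconds:,.0f} s of wall-clock time (~{total_seconds / 3600:.1f} h)")
```

If that assumption holds, 29.5K GB-sec is roughly 236,000 seconds (about 65.6 hours) of total wall-clock time, which would explain why it looks so large next to the millisecond-level CPU figures.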

I've had some discussions about RAM, and it sounds like (tbd) GB-sec billing is deprecated and you're limited to 128MB of RAM usage, so from that perspective it's the same as Azure.

In theory, this does simplify pricing. I will probably come back and update this with some more notes later.

What is still different from Azure is the lower minimum number of milliseconds billed, which rewards you for writing optimal code.

Also see this announcement: New Workers pricing - never pay to wait on I/O again